This Tuesday marked the last day of the coding sprints, and with that PyCon Canada 2019 ended. Now that I have wrapped up my own commitments for the conference, I feel that I should post a brief retrospective on how the conference went.

Overall the conference this year turned out very well! We organizers received a lot of praise for our hard work, and I am humbled to be counted as one of the core organizers. I am seriously considering helping out again next year with the sprints.

Preparation

Any larger conference takes time and energy to prepare for. PyCon Canada 2019 proved no different. Thankfully experience from last year proved valuable in getting everything to come together. As the event drew closer, we were able to stay on track with the work, through meetings, emails and messages.

If you have never worked on a conference organizing team, you may not realize how much work goes into a conference:

- Picking out a venue.

- Choosing an appropriate mix of talks.

- Organizing schedules and volunteers.

- Ensuring equipment runs smoothly.

- Designing promotional material, merchandise, websites, etc.

- Figuring out the catering to feed 500+ attendees.

- Promoting everything.

Thankfully we had a great team, and were able to make everything come together. Thank you, Elaine Wong and Chris Fournier, for chairing this conference and getting us all through it! Thanks to the team, I could focus on the sprints and on getting my employer to sponsor the conference, while others focused on other aspects of the conference.

Venue

The Carlu proved an excellent venue for the conference. Not only is it a stylish, comfortable, compact (but not crowded) space, it is also staffed by a very professional crew. We ran into a few minor technical difficulties, but otherwise the operation went smoothly. The catering and food were amazing, even impressing our French Canadian attendees (who are accustomed to good cuisine)! Hopefully we will be able to use the space again in the future.

Sponsors

Corporate sponsors help keep PyCon Canada reasonably priced, and in turn, as an organizer, I hope the sponsors find it beneficial. A conference lets sponsors get the word out.

During the conference I ended up chatting with quite a few of the sponsors. The visual effects and animation studio DNEG was an unexpected sponsor. I ended up talking with their reps a lot about their asset setup (in-house Python), and their work. (I am a huge nerd when it comes to computer graphics and animation.)

Also it was nice to see the other sponsors:

- Microsoft

- Tucows

  - Turns out that it is a small world when it comes to former coworkers.

- Linode

- Shopify

  - I didn’t know you guys have an enterprise team too. My condolences. 😀

- Wave

  - I’m super impressed that you managed to pull off having a few production-ready reactive systems IRL.

- Yelp

It was great talking to all of you, and I learned something new.

I also appreciate all the effort that went into getting all the sponsors on board. Getting my own employer to sponsor proved a bit more involved than I expected. Even though everyone at Points wanted to go, details of who could go, what benefits to expect from the sponsorship, which budget would be involved, etc., took a while to figure out. I am hoping that planning for next year’s conference starts early, so that the sponsorship experience goes more smoothly.

Talks

I enjoyed our selection of talks this year! And I heard similar sentiments from other attendees. Unfortunately, as a speaker and organizer, I did not get to see many talks. These are the ones that really stood out for me:

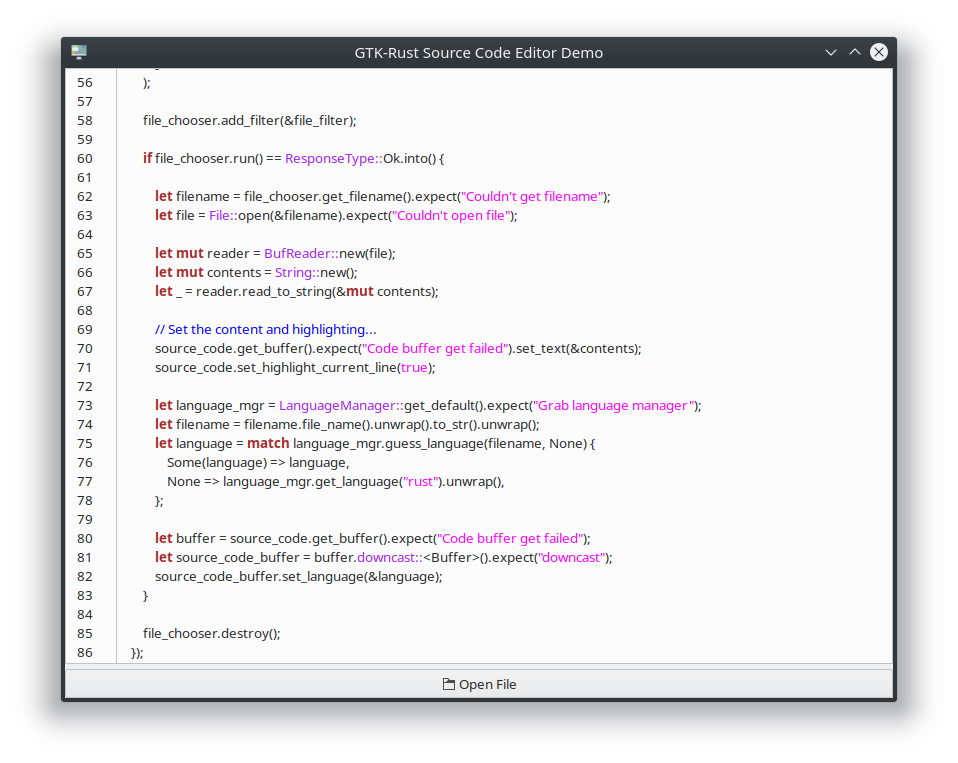

I also presented my talk Rust Accelerated Python at PyCon Canada this year. It went very well; thank you to both Elaine and the session chair for making the session go well. I found the setup for the slides vs. speaker notes a bit fiddly, but not terribly so. Otherwise my edits to make the talk more concise went well, and I had time for a Q&A! Overall I got a lot of interest in both Rust and creating bindings in Python. I ended up fielding quite a few questions about the state of the Rust ecosystem, how useful it would be to learn Rust for data science, etc. I am super happy that I was able to both educate and entertain, and enjoy doing so!

You can view the talk slides here and the associated BitBucket repo here.

Sprints

Finally, the coding sprints went very well, apart from some minor organizational snafus that almost no one noticed, and that I plan on improving for next year. We got a good mix of both experienced and novice developers coming out. People sprinted on around 8-10 projects including: pyodide, graphene, pylint, commitizen, flask and statsmodels. Overall people enjoyed the sprints, and many of the first-time sprinters expressed wanting to sprint again!

I personally got to work on the pyodide project, once everyone was settled in.

Thanks to Mozilla Toronto for providing the space and hosting us! And thanks again to Elaine for making sure we had coffee, sweets and pizza to fuel the sprinters!